BIG DATA TECHNOLOGY LAB

| SL.NO | TOPIC |
|---|---|
| 1 | 1. Implement the following Data Structures a) Linked List b) Stacks c) Queues d) Set e) Map 2. Web Monitoring Tools for Hadoop Setup |
| 2 | Hadoop Eco-System |
| 3 | Hadoop Architecture |
| 4 | Hadoop Deployment Methods a) Standalone Mode b) Pseudo Distributed Mode c) Fully Distributed Mode |
| 5 | Verifying Hadoop In Local System |
| 6 | Implement the following file management tasks in Hadoop: a) Adding files and directories b) Retrieving files c) Deleting files |
| 7 | HDFS architecture |
| 8 | Hadoop–Map Reduce architecture |
| 9 | MapReduce word count example |
| 10 | Architecture of YARN |

Web Monitoring Tools for Hadoop Setup

Hadoop monitoring tools:

Datadog – Cloud monitoring software with a customizable Hadoop dashboard, integrations, alerts, and more.

LogicMonitor – Infrastructure monitoring software with a Hadoop package, REST API, alerts, reports, dashboards, and more.

Dynatrace – Application performance management software with Hadoop monitoring, including NameNode/DataNode metrics, dashboards, analytics, custom alerts, and more.

Apache Ambari – The Apache Ambari project aims to make Hadoop cluster management easier by providing software for provisioning, managing, and monitoring Apache Hadoop clusters. It is a useful tool not only for administering a cluster but also for monitoring it.

Cloudera Manager – A cluster management application that ships as part of the Cloudera Hadoop commercial distribution, but is also available as a free download.
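Tools like these typically build their dashboards from metrics that the Hadoop daemons already publish. As a rough illustration, the NameNode web interface serves its metrics as JSON under `/jmx` (port 9870 in Hadoop 3.x, 50070 in 2.x). The sketch below only parses such a document; the function name `summarize_namenode` and the sample payload are made up for this example, and a real payload contains many more beans and fields.

```python
import json

# Bean name under which the NameNode publishes file-system state over JMX.
FS_STATE_BEAN = "Hadoop:service=NameNode,name=FSNamesystemState"


def summarize_namenode(jmx_payload: str) -> dict:
    """Extract a few cluster-health numbers from a NameNode /jmx JSON document."""
    beans = json.loads(jmx_payload)["beans"]
    state = next(b for b in beans if b.get("name") == FS_STATE_BEAN)
    total = state["CapacityTotal"]
    used = state["CapacityUsed"]
    return {
        "live_datanodes": state["NumLiveDataNodes"],
        "dead_datanodes": state["NumDeadDataNodes"],
        # Guard against division by zero on an empty or unformatted cluster.
        "used_percent": round(100.0 * used / total, 1) if total else 0.0,
    }


# Made-up sample payload, trimmed to the fields used above.
SAMPLE = json.dumps({
    "beans": [{
        "name": FS_STATE_BEAN,
        "NumLiveDataNodes": 3,
        "NumDeadDataNodes": 1,
        "CapacityTotal": 1000,
        "CapacityUsed": 250,
    }]
})

print(summarize_namenode(SAMPLE))
```

On a live cluster the payload could be fetched with `urllib.request.urlopen` against the NameNode's `/jmx` URL instead of using the sample string.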

-----------------------------------------------------------------------------------------------------------------------------

Hadoop Deployment Methods

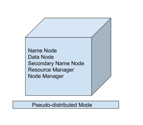

2. Pseudo-Distributed Mode – Also called a single-node cluster, where both the NameNode and the DataNode reside on the same machine. All the daemons (NameNode, DataNode, SecondaryNameNode, JobTracker, TaskTracker, etc.) run on the same machine in this mode, which produces a fully functioning cluster on a single machine.
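One way to confirm that every daemon really is running on the one machine is to inspect the output of the JDK's `jps` tool, which prints one `PID ClassName` line per Java process. The following is a minimal sketch, not an official check: the helper `missing_daemons` and the sample output are invented for illustration, and the expected set uses the daemon names listed above (on Hadoop 2 and later, ResourceManager and NodeManager would take the place of JobTracker and TaskTracker).

```python
def missing_daemons(jps_output: str, expected: set) -> set:
    """Return the expected daemon names that do not appear in `jps` output."""
    running = set()
    for line in jps_output.splitlines():
        parts = line.split()
        # Each useful line looks like "<pid> <ClassName>"; skip anything else.
        if len(parts) >= 2:
            running.add(parts[1])
    return set(expected) - running


# Daemon names taken from the description of pseudo-distributed mode.
EXPECTED = {"NameNode", "DataNode", "SecondaryNameNode", "JobTracker", "TaskTracker"}

# Made-up jps output in which TaskTracker has not started.
SAMPLE_JPS = """\
4821 NameNode
4963 DataNode
5170 SecondaryNameNode
5342 JobTracker
5688 Jps
"""

print(missing_daemons(SAMPLE_JPS, EXPECTED))
```

On a real machine the text could come from `subprocess.run(["jps"], capture_output=True, text=True).stdout`.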

Because there is only a single-node setup, all the master and slave processes are handled by one system. The NameNode and ResourceManager act as masters, and the DataNode and NodeManager act as slaves (ResourceManager and NodeManager are the YARN daemons that replace JobTracker and TaskTracker from Hadoop 2 onward). A SecondaryNameNode also runs as a master process. Its purpose is to periodically checkpoint the NameNode's metadata (hourly by default); it is not a standby NameNode. In this mode, the configuration files mapred-site.xml, core-site.xml, and hdfs-site.xml need to be changed to set up the environment.
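To make the configuration step concrete, here is a small sketch that renders the `<configuration>` XML these files contain. The property values follow the commonly documented single-node settings (`fs.defaultFS` pointing at `hdfs://localhost:9000`, `dfs.replication` set to 1, and `mapreduce.framework.name` set to `yarn` on Hadoop 2 and later); treat them as assumptions to adapt, and note that the helper `hadoop_site_xml` is hypothetical, not part of Hadoop.

```python
import xml.etree.ElementTree as ET
from xml.dom import minidom


def hadoop_site_xml(properties: dict) -> str:
    """Render name/value pairs in the <configuration> format of Hadoop *-site.xml files."""
    root = ET.Element("configuration")
    for name, value in properties.items():
        prop = ET.SubElement(root, "property")
        ET.SubElement(prop, "name").text = name
        ET.SubElement(prop, "value").text = str(value)
    raw = ET.tostring(root, encoding="unicode")
    # Pretty-print so the result is readable when saved to disk.
    return minidom.parseString(raw).toprettyxml(indent="  ")


# Assumed settings for a pseudo-distributed (single-node) setup.
PSEUDO_DISTRIBUTED = {
    "core-site.xml": {"fs.defaultFS": "hdfs://localhost:9000"},
    "hdfs-site.xml": {"dfs.replication": 1},
    "mapred-site.xml": {"mapreduce.framework.name": "yarn"},
}

for filename, props in PSEUDO_DISTRIBUTED.items():
    print("# " + filename)
    print(hadoop_site_xml(props))
```

The script only prints the XML; writing each string into the Hadoop configuration directory is left out on purpose, since that path depends on the installation.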

3. Fully Distributed Mode – Hadoop runs on multiple nodes, with separate nodes for the master and slave daemons. The data is distributed across a cluster of machines, providing a production environment. The NameNode, JobTracker, and SecondaryNameNode (optional, and can run on a separate node) daemons run on the master node. The DataNode and TaskTracker daemons run on the slave nodes.

In the other modes, you download Hadoop as a tar or zip file, install it on your system, and run all the processes on that single system. In fully distributed mode, the tar or zip file is extracted on each node of the Hadoop cluster, and each node is used for a particular process. Once the processes are distributed among the nodes, you define which nodes work as masters and which work as slaves.
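The master/slave assignment can be pictured as a simple mapping from host to daemons. The sketch below is illustrative only: the host names are hypothetical, and the helpers `worker_hosts`, `master_hosts`, and `workers_file` are invented for this example. The text it produces matches the one-host-per-line format of the file Hadoop's start scripts read to find slave nodes (`workers` in Hadoop 3.x, `slaves` in earlier releases).

```python
# Hypothetical three-node cluster; daemon names follow the description above.
CLUSTER_ROLES = {
    "master1": ["NameNode", "JobTracker", "SecondaryNameNode"],
    "worker1": ["DataNode", "TaskTracker"],
    "worker2": ["DataNode", "TaskTracker"],
}


def worker_hosts(roles: dict) -> list:
    """Hosts that run a DataNode, i.e. the slave nodes of the cluster."""
    return sorted(host for host, daemons in roles.items() if "DataNode" in daemons)


def master_hosts(roles: dict) -> list:
    """Hosts that run the NameNode, i.e. the master node(s)."""
    return sorted(host for host, daemons in roles.items() if "NameNode" in daemons)


def workers_file(roles: dict) -> str:
    """One slave host name per line, as expected in the workers/slaves file."""
    return "\n".join(worker_hosts(roles)) + "\n"


print("masters:", master_hosts(CLUSTER_ROLES))
print(workers_file(CLUSTER_ROLES), end="")
```

Keeping the assignment in one place like this makes it easy to see that every slave host carries both a DataNode and a TaskTracker, as the description requires.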
